Knime File Uploaded Does Not Show File Selector

Introduction

With the move towards cloud and hybrid environments we had to rethink and rewrite the existing file handling infrastructure in KNIME Analytics Platform to provide our users with a better user experience.

With KNIME Analytics Platform release 4.3 we introduced a new file handling framework thanks to which you can migrate workflows between file systems or manage various file systems within the same workflow in a more convenient way.

In this guide the following topics are covered:

- Basic concepts concerning file systems
- How to access different file systems from within KNIME Analytics Platform
- How to read and write from and to different file systems and conveniently transform and adapt your data tables when importing them into your workflow
- The new Path type and how to use it within the nodes that are built on the basis of the file handling framework
- Finally, in the Compatibility and migration section you will find more detailed information about:
  - How to distinguish between the old and new file handling nodes
  - How to work with workflows that contain both old and new file handling nodes
  - How to migrate your workflows from old to new file handling nodes

Basic concepts about file systems

In general, a file system is a process that manages how and where data is stored, accessed and managed.

In KNIME Analytics Platform a file system can be seen as a forest of trees where a folder constitutes an inner tree node, while a file or an empty folder are the leaves.

Working directory

A working directory is a folder used by KNIME nodes to disambiguate relative paths. Every file system will have a working directory, whether it is configured explicitly or implicitly.

Path syntax

A path is a string that identifies a file or folder position within a file system. The path syntax depends on the file system, e.g. a path on a Windows local file system might look like C:\Users\username\file.txt, while on Linux and most other file systems in KNIME Analytics Platform it might look like /folder1/folder2/file.txt.

Paths can be distinguished in:

- Absolute: An absolute path uniquely identifies a file or folder. It always starts with a file system root.
- Relative: A relative path does not identify one particular file or folder. It is used to identify a file or folder relative to an absolute path.
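As a minimal sketch of how a working directory disambiguates a relative path, the following Python snippet uses the standard `pathlib` module. The working directory `/folder1` and the helper name `resolve` are assumptions chosen for illustration; KNIME nodes perform this resolution internally and may differ in detail.

```python
from pathlib import PurePosixPath, PureWindowsPath


def resolve(working_dir: PurePosixPath, path: str) -> PurePosixPath:
    """Return an absolute path; relative paths are resolved against working_dir."""
    candidate = PurePosixPath(path)
    # An absolute path already identifies one file or folder and is kept as-is.
    return candidate if candidate.is_absolute() else working_dir / candidate


working_dir = PurePosixPath("/folder1")  # hypothetical working directory

print(resolve(working_dir, "folder2/file.txt"))           # relative path
print(resolve(working_dir, "/folder1/folder2/file.txt"))  # already absolute

# The syntax depends on the file system: a Windows path starts with a drive root.
print(PureWindowsPath(r"C:\Users\username\file.txt").is_absolute())
```

The same relative path resolves to a different file when the working directory changes, which is why every file system needs one.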

KNIME Analytics Platform and file systems

Different file systems are available to use with KNIME Analytics Platform. Reader and writer nodes are able to work with all supported file systems.

File systems within KNIME Analytics Platform can be divided into two main categories:

- Standard file systems
- Connected file systems

Standard file systems

Standard file systems are available at any time, meaning they do not need a connector node to connect to them.

Their working directory is pre-configured and does not need to be explicitly specified.

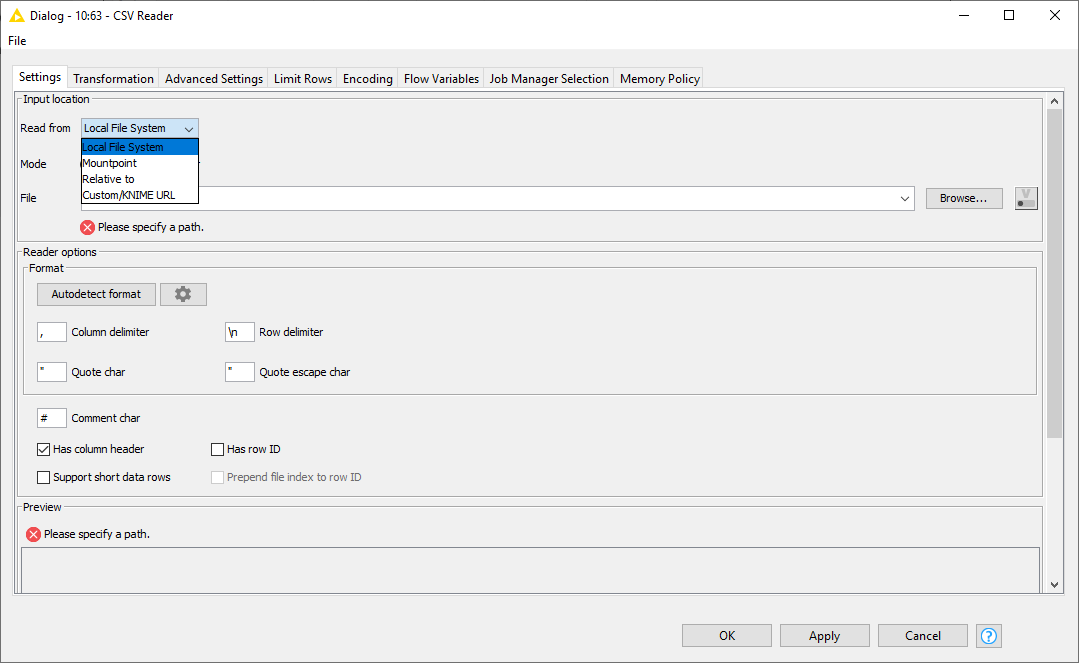

To use a reader node to read a file from a standard file system, drag and drop the reader node for the file type you want to read, e.g. CSV Reader for a .csv file, into the workflow editor from the node repository.

Right click the node and choose Configure… from the context menu. In the Input location pane under the Settings tab you can choose the file system you want to read from in a drop-down menu.

The following standard file systems are available in KNIME Analytics Platform:

- Local file system
- Mountpoint
- Relative to
  - Current workflow
  - Current mountpoint
  - Current workflow data area
- Custom/KNIME URL

Local file system

When reading from Local File System the path syntax to use will depend on the system on which the workflow is executing, i.e. whether it is a Windows or a UNIX operating system.

The working directory will be implicit and will correspond to the system root directory.

| You can also have access to network shares supported by your operating system, via the local file system within KNIME Analytics Platform. |

Please be aware that when executing a workflow on KNIME Server version 4.11 or higher the local file system access is disabled for security reasons. The KNIME Server administrators can activate it, but this is not recommended. For more information about this please refer to the KNIME Server Administration Guide.

Mountpoint

With the Mountpoint option you will have access to KNIME mount points, such as LOCAL, your KNIME Server mount points, if any, and the KNIME Hub. You have to be logged in to the specific mountpoint to have access to it.

The path syntax will be UNIX-like, i.e. /folder1/folder2/file.txt and relative to the implicit working directory, which corresponds to the root of the mountpoint.

Please note that workflows within the mountpoints are treated as files, so it is not possible to read or write files within a workflow.

Relative to

With the Relative to option you will have access to three different file systems:

- Current mountpoint and Current workflow: The file system corresponds to the mountpoint where the currently executing workflow is located. The working directory is implicit and it is:
  - Current mountpoint: The working directory corresponds to the root of the mountpoint
  - Current workflow: The working directory corresponds to the path of the workflow in the mountpoint, e.g. /workflow_group/my_workflow.
- Current workflow data area: This file system is dedicated to and accessible by the currently executing workflow. Data are physically stored inside the workflow and are copied, moved or deleted together with the workflow.

All the paths used with the option Relative to are of the type folder/file and they must be relative paths.

In the example above you will read a .csv file from a folder data which is in:

<knime-workspace>/workflow_group/my_workflow/data/

Please note that workflows are treated as files, so it is not possible to read or write files within a workflow.

When the workflow is executing on KNIME Server the options Relative to → Current mountpoint or Current workflow will access the workflow repository on the server. The option Relative to → Current workflow data area, instead, will access the data area of the job copy of the workflow. Please be aware that files written to the data area will be lost if the job is deleted.

Custom/KNIME URL

This option works with a pseudo-file system that allows you to access single files via URL. It supports the following URLs:

- knime://
- http(s):// if authentication is not needed
- ssh:// if authentication is not needed
- ftp:// if authentication is not needed

For this option, you can also manually set a timeout parameter (in milliseconds) for reading and writing.

The URL syntax should be as follows:

- scheme:[//authority]path[?query][#fragment]
- The URL must be encoded, e.g. spaces and some special characters that are reserved, such as ?. To encode the URL you can use any available online URL encoder tool.

Using this option you can read and write single files, but you will not be able to move and copy files or folders. Listing files in a folder, i.e. browsing, is also not supported.
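As an alternative to an online encoder, the encoding step can be sketched with Python's standard `urllib.parse` module. The host `server:443` and the file names below are placeholders for illustration only; the snippet shows generic URL encoding and parsing, not how KNIME processes the URL internally.

```python
from urllib.parse import quote, urlsplit

# Encode only the path component; "/" is kept as the path separator.
raw_path = "/my example"
encoded_path = quote(raw_path)  # the space becomes %20

url = f"https://server:443{encoded_path}?query=value#frag"
parts = urlsplit(url)

print(url)
# scheme, authority, path, query and fragment of the encoded URL
print(parts.scheme, parts.netloc, parts.path, parts.query, parts.fragment)

# A literal "?" inside a file name must be encoded, otherwise it starts the query.
print(quote("/report?.csv"))
```

Encoding the path before assembling the URL keeps reserved characters in file names from being misread as URL delimiters.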

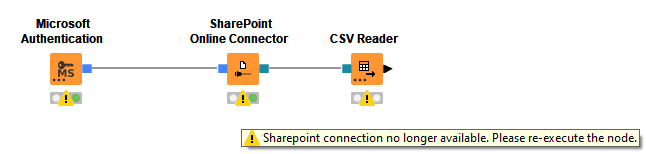

Connected file systems

Connected file systems instead require a connector node to connect to the specific file system. In the connector node's configuration dialog it is possible to configure the most convenient working directory.

The file system Connector nodes that are available in KNIME Analytics Platform can be divided into two main categories:

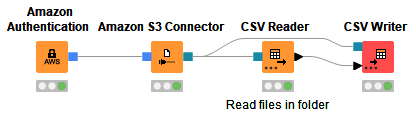

Connector nodes that need an Authentication node:

- Amazon S3 Connector node with Amazon Authentication node
- Google Cloud Storage Connector node with Google Authentication (API Key) node
- Google Drive Connector node with Google Authentication node
- SharePoint Online Connector node with Microsoft Authentication node
- Azure Blob Storage Connector node with Microsoft Authentication node
- Azure Data Lake Storage Gen2 node with Microsoft Authentication node

Connector nodes that do not need an Authentication node:

- Databricks File System Connector node
- HDFS Connector node
- HDFS Connector (KNOX) node
- Create Local Bigdata Environment node
- SSH Connector node
- HTTP(S) Connector node
- FTP Connector node
- KNIME Server Connector node

File systems with external Authentication

The path syntax varies according to the connected file system, but in most cases it will be UNIX-like. Information on this is given in the respective Connector node descriptions.

Typically in the configuration dialog of the Connector node you will be able to:

- Set up the working directory: In the Settings tab type the path of the working directory or browse through the file system to set one.
- Set up the timeouts: In the Advanced tab set up the connection timeout (in seconds) and the read timeout (in seconds).

Most connectors will require a network connection to the respective remote service. The connection is then opened when the Connector node executes and closed when the Connector node is reset or the workflow is closed.

It is important to note that the connections are not automatically re-established when loading an already executed workflow. To connect to the remote service you will then need to execute the Connector node again.

Amazon file system

To connect to Amazon S3 file system you will need to use:

- Amazon Authentication node
- Amazon S3 Connector node

The Amazon S3 file system normalizes paths. Amazon S3 allows paths such as /mybucket/.././file, where ".." and "." must not be removed during path normalization because they are part of the name of the Amazon S3 object. When such a case is present you will need to uncheck the Normalize paths option in the Amazon S3 Connector node configuration dialog.
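To see why normalization can be a problem for such object names, here is a small sketch using Python's standard `posixpath.normpath`. It stands in for path normalization in general and is an assumption, not the connector's actual implementation.

```python
import posixpath

key = "/mybucket/.././file"

# Generic POSIX-style normalization collapses "." and ".." segments.
normalized = posixpath.normpath(key)

print(normalized)         # a different path than the original object name
print(normalized == key)  # the normalized path no longer matches the object
```

Because the normalized path no longer contains the bucket or the dot segments, it would point to a different location than the object that was actually stored.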

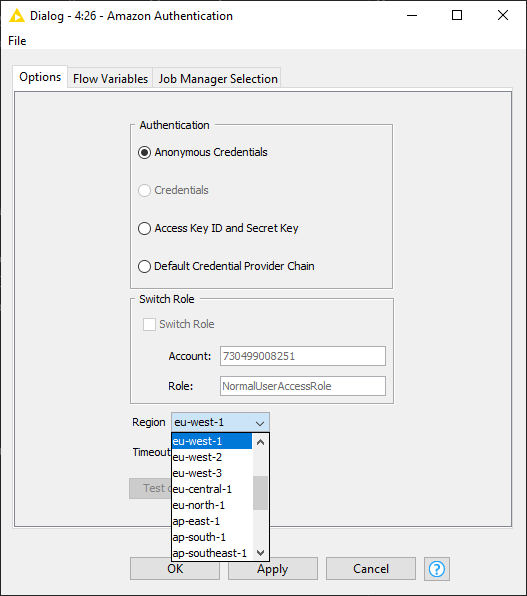

Please be aware that each bucket in Amazon S3 belongs to an AWS region, e.g. eu-west-1. To access the bucket the client needs to be connected to the same region. You can select the region to connect to in the Amazon Authentication node configuration dialog.

Google file systems

We support two file systems related to Google. However, even though they both belong to Google services, the corresponding Connector nodes use a different authentication type, and hence a different Authentication node.

To connect to Google Cloud Storage you will need to use:

- Google Cloud Storage Connector node
- Google Authentication (API Key) node

Like the Amazon S3 Connector node, the Google Cloud Storage Connector node also normalizes paths.

| The specific Google API you want to use has to be enabled under APIs. |

After you create your Service Account you will receive a p12 key file, which you will need to point to in the Google Authentication (API Key) node configuration dialog.

To connect to Google Drive, instead, you will need to use:

- Google Drive Connector node
- Google Authentication node

The root folder of the Google Drive file system contains your Shared drives, in case any is available, and the folder My Drive. The path of your Shared drives will then be /shared_driver1/, while the path of your folder My Drive will be /My Drive/.

Microsoft file systems

We support three file systems related to Microsoft.

To connect to SharePoint Online, Azure Blob Storage, or Azure Data Lake Storage Gen2, you will need to use:

- SharePoint Online Connector node, or Azure Blob Storage Connector node, or Azure ADLS Gen2 Connector node
- Microsoft Authentication node

The SharePoint Online Connector node connects to a SharePoint Online site. Here document libraries are represented as top-level folders.

In the node configuration dialog you can choose to connect to the following sites:

- Root Site: Root site of your organization
- Web URL: https URL of the SharePoint site (same as in the browser)
- Group site: Group site of an Office365 user group
- Subsite: Connects to a subsite or sub-sub-site of the above

For Azure Blob Storage, the path syntax will be UNIX-like, i.e. /mycontainer/myfolder/myfile, and relative to the root of the storage. The Azure Blob Storage Connector node also performs path normalization.

The Azure ADLS Gen2 Connector node connects to an Azure Blob Storage file system.

The path syntax will be UNIX-like, i.e. /mycontainer/myfolder/myfile and relative to the root of the storage.

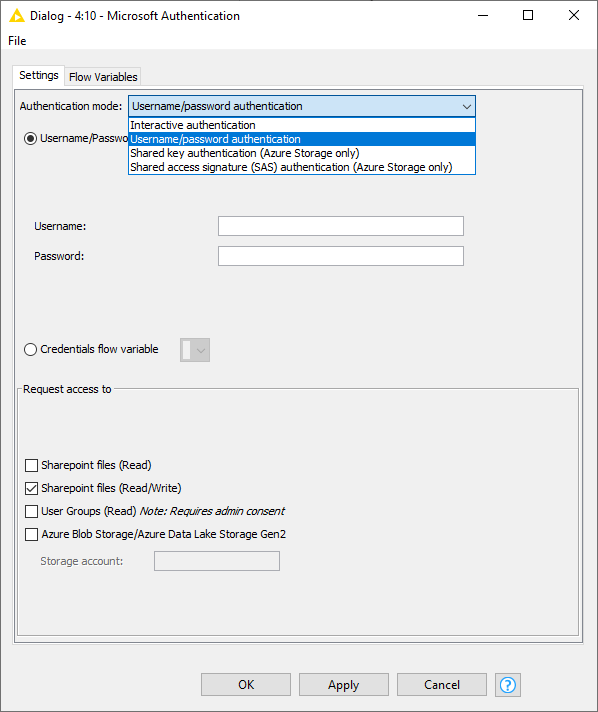

The Microsoft Authentication node offers OAuth authentication for Azure and Office365 clouds.

It supports the following authentication modes:

- Interactive authentication: Performs an interactive, web browser based login by clicking on Login in the node dialog. In the browser window that pops up, you may be asked to consent to the requested level of access. The login results in a token being stored in a configurable location. The token will be valid for a certain amount of time that is defined by your Azure AD settings.
- Username/password authentication: Performs a non-interactive login to obtain a fresh token every time the node executes. Since this login is non-interactive and you get a fresh token every time, this mode is well-suited for workflows on KNIME Server. However, it also has some limitations. First, you cannot consent to the requested level of access, hence consent must be given beforehand, e.g. during a previous interactive login, or by an Azure AD directory admin. Second, accounts that require multi-factor authentication (MFA) will not work.
- Shared key authentication (Azure Storage only): Specific to Azure Blob Storage and Azure Data Lake Storage Gen2. Performs authentication using an Azure storage account and its secret key.
- Shared access signature (SAS) authentication (Azure Storage only): Specific to Azure Blob Storage and Azure Data Lake Storage Gen2. Performs authentication using a shared access signature (SAS). For more details on shared access signatures see the Azure storage documentation.

File systems without external Authentication

All the Connector nodes that do not need an external Authentication node will connect upon execution to a specific file system. This allows the downstream nodes to access the files of the remote server or file system.

KNIME Server Connector node

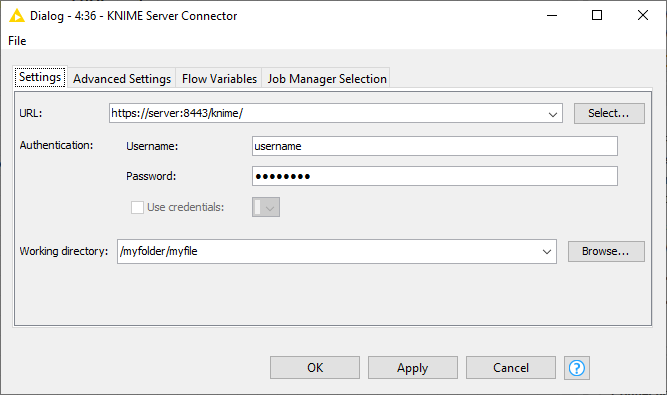

With the KNIME Server Connector node you are able to connect to a KNIME Server instance.

When opening the KNIME Server Connector node configuration dialog you will be able to either type in the URL of the KNIME Server you want to connect to, or to Select… it from those available among your mountpoints in KNIME Explorer. You do not need to have the KNIME Server mountpoint set up or already connected to use this node.

You can authenticate either by typing in your username and password or by using the selected credentials provided by a flow variable, if any is available. Please be aware that when authenticating by typing in your username and password, the password will be persistently stored in an encrypted form in the settings of the node and will then be saved with the workflow.

SMB Connector node

With the SMB Connector node you can connect to a remote SMB server (e.g. Samba, or Windows Server). The resulting output port allows downstream nodes to access the files in the connected file system.

This node generally supports versions 2 and 3 of the SMB protocol. It also supports connecting to a Windows DFS namespace.

When opening the SMB Connector node configuration dialog you can choose to connect to a File server host or a Windows Domain. Choosing File server specifies that a direct connection shall be made to access a file share on a specific file server. A file server is any motorcar that runs an SMB service, such as those provided by Windows Server and Samba.

Choosing Domain specifies that a connection shall be made to access a file share in a Windows Active Directory domain.

Read and write from or to a connected file system

When you successfully connect to a connected file system you will be able to connect the output port of the Connector node to any node that is developed under the File Handling framework.

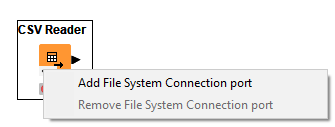

To do this you will need to activate the corresponding dynamic port of your node.

| You can also enable dynamic ports to connect to a connected file system in Utility nodes. |

To add a dynamic port to one of the nodes where this option is available, right-click the three dots in the bottom-left corner of the node and from the context menu choose Add File System Connection port.

Reader nodes

A number of Reader nodes in KNIME Analytics Platform are updated to work within the File Handling framework.

| See the How to distinguish between the old and new file handling nodes section to learn how to identify the reader nodes that are compatible with the new File Handling framework. |

You can use these Reader nodes with both Standard file systems and Connected file systems. Moreover, using the File System Connection port you can easily switch the connection between different connected file systems.

In the following example a CSV Reader node with a File System Connection port is connected to an Azure Blob Storage Connector node and it is able to read a .csv file from an Azure Blob Storage connected file system. Exchanging the connection with any of the other Connector nodes, i.e. Google Drive and SharePoint Online, will allow you to read a .csv file from the other file systems.

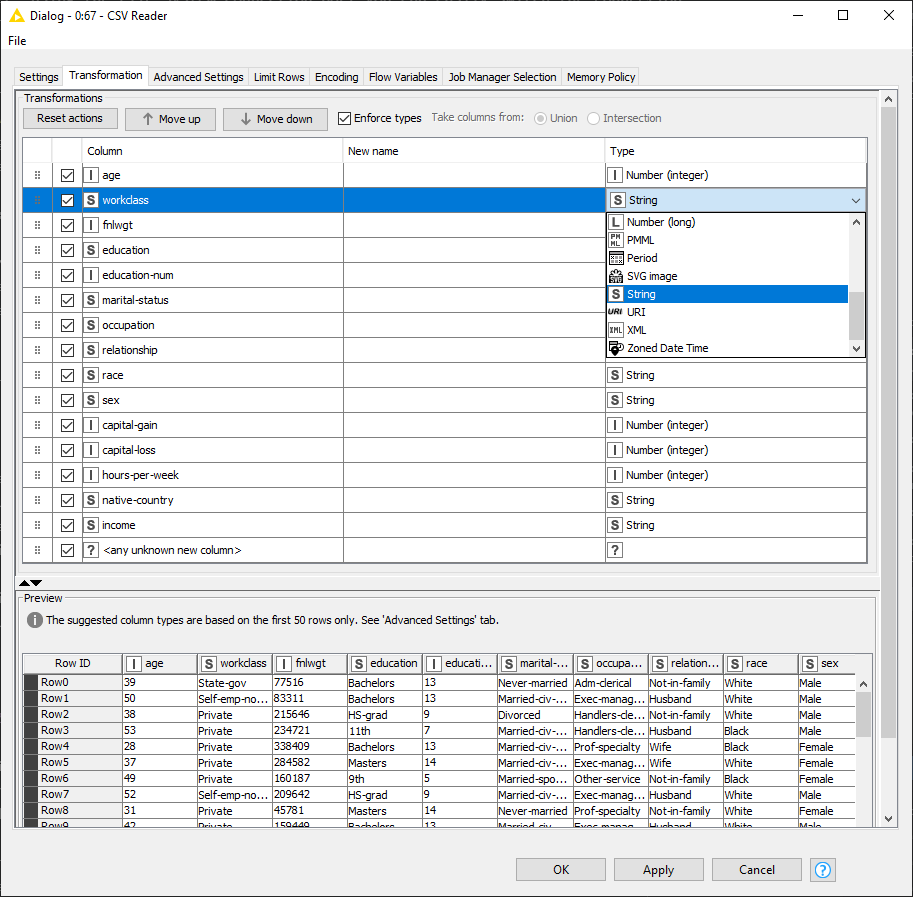

Transformation tab

The new table based reader nodes, e.g. Excel Reader or CSV Reader, allow you to transform the data while reading it via the Transformation tab. To do so the node analyzes the structure of the file(s) to read in the node dialog and stores the file structure and the transformation that should be applied. When the node is executed this information is used to read the data and apply the transformations before creating the KNIME data table. This allows the nodes to return a table specification during configuration of the node and not only once the node is executed, assuming that the file structure does not change. The benefit of this is that you can configure downstream nodes without executing the reader node first, and it improves the execution speed of the node.

| To speed up the file analysis in the node dialog, by default the reader node only reads a limited amount of rows, which might lead to mismatching type exceptions during execution. To increase the limit or disable it completely open the Advanced Settings tab and go to the Table specification section. |

To change the transformation in the updated reader node's configuration dialog, go to the Transformation tab after selecting the desired file. This tab displays every column as a row in a table that allows modifying the structure of the output table. It supports reordering, filtering and renaming columns. It is also possible to change the type of the columns. Reordering is done via drag and drop. Simply drag a column to the position it should have in the output table. Note that the positions of columns are reset in the dialog if a new file or folder is selected.

If you are reading multiple files from a folder, i.e. with the option Files in folder in the Settings tab, you can also choose to take the resulting columns from the Union or Intersection of the files you are reading in.
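The difference between the two choices can be sketched in a few lines of Python. The column names and the ordering rules (first-seen order for the union, first-file order for the intersection) are assumptions made for this illustration and are not taken from the reader nodes themselves.

```python
def union_columns(schemas):
    """Columns appearing in any file, in first-seen order."""
    seen = []
    for columns in schemas:
        for name in columns:
            if name not in seen:
                seen.append(name)
    return seen


def intersection_columns(schemas):
    """Columns appearing in every file, in the order of the first file."""
    if not schemas:
        return []
    common = set(schemas[0]).intersection(*schemas[1:])
    return [name for name in schemas[0] if name in common]


# Hypothetical column names of two files in the same folder.
schemas = [["id", "name", "price"], ["id", "price", "currency"]]

print(union_columns(schemas))
print(intersection_columns(schemas))
```

With the union, columns missing in some files have no values for the rows read from those files; with the intersection, only columns shared by all files are kept.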

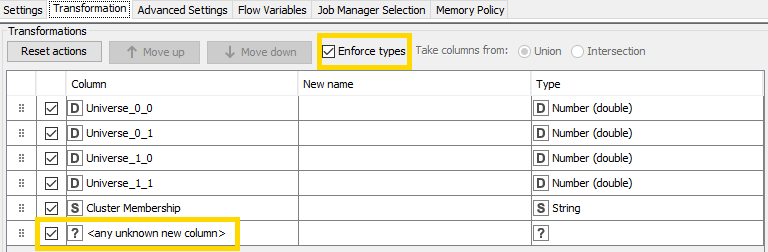

The Transformation tab provides two options which are important when dealing with changing file structures if you control the reader via flow variables.

The Enforce types option controls how columns whose type changes are dealt with. If selected, the node attempts to map to the KNIME type you configured in the Transformation tab and fails if that is not possible. If unselected, the KNIME type corresponding to the new type is used whether it is compatible with the configured type or not. This might cause downstream nodes to fail if the type of a configured column has changed.

The <any unknown new column> option is a placeholder for all previously unknown columns and specifies whether and where these columns should be added during execution.

Controlling readers via flow variables

As described in the Transformation tab section, the reader assumes that the structure of the file will not change after closing the node dialog. However when a reader is controlled via a flow variable the resulting table specification can change. This happens if settings that affect which data should be read are changed via a flow variable. One example is the reading of several CSV files with different columns in a loop where the path to the file is controlled by a flow variable.

During configuration the node checks if the settings have changed. If that is the case the node will not return any table specification during configuration and will analyze the new file structure with each execution. In doing so it will apply the transformations to the newly discovered file structure using the <any unknown new column> and Enforce types options.

| Currently the new reader nodes only detect changes of the file path. Other settings via flow variables that might influence the structure, such as a different sheet name in the Excel Reader or a different column separator in the CSV Reader, are not monitored with the current version. We are aware of this limitation and plan to change this with KNIME Analytics Platform 4.4. Until then you need to enable the Support changing file schemas option, which is described in the next section, if you encounter this problem. |

Reading the same data files with changing structure

As described in the Transformation tab section, the reader assumes that the structure of the file will not change after closing the node dialog. However the structure of the file can change (e.g. by adding additional columns) if it gets overwritten. In this case you need to enable the Support changing file schemas option on the Advanced Settings tab. Enabling this option forces the reader to compute the table specification during each execution, thus adapting to the changes of the file structure.

| The Support changing file schemas option will disable the Transformation tab and the node will not return any table specification during configuration. |

Append a path column

In the Advanced Settings tab you can also check the option Append path column under the Path column pane. If this option is checked, the node will append a path column with the provided name to the output table. This column will contain, for each row, the file it was read from. The node will fail if adding the column with the provided name causes a name collision with any of the columns in the read table. This allows you to distinguish the file from which a specific row was read in case you are reading multiple files and concatenating them into a single data table.
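The behavior described above can be pictured with a short Python sketch. The function name `concat_with_path_column`, the default column name `Path`, and the file paths are hypothetical; the snippet only mimics the described outcome and is not the node's implementation.

```python
def concat_with_path_column(tables, path_column="Path"):
    """Concatenate rows from several files, recording each row's source file.

    tables maps a file path to a list of row dictionaries.
    """
    result = []
    for path, rows in tables.items():
        for row in rows:
            if path_column in row:
                # Mirrors the described failure on a column name collision.
                raise ValueError(f"Column name collision: {path_column!r}")
            result.append({**row, path_column: path})
    return result


# Hypothetical files and contents.
tables = {
    "/data/jan.csv": [{"id": 1}, {"id": 2}],
    "/data/feb.csv": [{"id": 3}],
}

for row in concat_with_path_column(tables):
    print(row)
```

Choosing a column name that already exists in the data, such as `id` here, raises an error instead of silently overwriting values.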

Writer nodes

Writer nodes can also be used in KNIME Analytics Platform to work within the File Handling framework.

You can use these Writer nodes with both Standard file systems and Connected file systems. Moreover, using the File System Connection port you can easily switch the connection between different connected file systems.

An output File System Connection port can be added to Writer nodes, which allows them to be easily connected to different file systems and to persistently write files to them.

In the following example a CSV Writer node with a File System Connection port is connected to an Azure Blob Storage Connector node and it is able to write a .csv file to an Azure Blob Storage connected file system. A CSV Reader node reads a .csv file from a SharePoint Online file system, the data is transformed, and the resulting data is written to the Azure Blob Storage file system.

Image Writer (Table) node

The Image Writer (Table) node is also available with a table based input.

You can give this node an input data table where a column contains an image in each data cell. Image Writer (Table) is able to process the images contained in a column of the input data table and write them as files to a specified output location.

Path data cell and flow variable

Files and folders can be uniquely identified via their path within a file system. Within KNIME Analytics Platform such a path is represented via a path type. A path type consists of three parts:

- Type: Specifies the file system type e.g. local, relative, mountpoint, custom_url or connected.
- Specifier: Optional string that contains additional file system specific information such as the location the relative to file system is working with such as workflow or mountpoint.
- Path: Specifies the location within the file system with the file system specific notation e.g. C:\file.csv on Windows operating systems or /user/home/file.csv on Linux operating systems.

Path examples are:

- LOCAL
  - (LOCAL, , C:\Users\username\Desktop)
  - (LOCAL, , \\fileserver\file1.csv)
  - (LOCAL, , /home/user)
- RELATIVE
  - (RELATIVE, knime.workflow, file1.csv)
  - (RELATIVE, knime.mountpoint, file1.csv)
- MOUNTPOINT
  - (MOUNTPOINT, MOUNTPOINT_NAME, /path/to/file1.csv)
- CUSTOM_URL
  - (CUSTOM_URL, , https://server:443/my%20example?query=value#frag)
  - (CUSTOM_URL, , knime://knime.workflow/file%201.csv)
- CONNECTED
  - (CONNECTED, amazon-s3:eu-west-1, /mybucket/file1.csv)
  - (CONNECTED, microsoft-sharepoint, /myfolder/file1.csv)
  - (CONNECTED, ftp:server:port, /home/user/file1.csv)
  - (CONNECTED, ssh:server:port, /home/user/mybucket/file1.csv)
  - (CONNECTED, http:server:port, /file.asp?key=value)

A path type can be packaged into either a Path Data Cell or a Path Flow Variable. By default the Path Data Cell within a KNIME data table only displays the path part. If you want to display the full path you can change the cell renderer via the context menu of the table header to the Extended path renderer.
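To make the three-part structure concrete, the following Python sketch models it as a small tuple type. The class name `PathLocation`, its field names, and the `render` method are hypothetical names introduced for illustration; they are not part of any KNIME API.

```python
from typing import NamedTuple


class PathLocation(NamedTuple):
    """Hypothetical stand-in for the three parts of a path type."""
    fs_type: str     # e.g. LOCAL, RELATIVE, MOUNTPOINT, CUSTOM_URL, CONNECTED
    specifier: str   # optional, empty string when not used
    path: str

    def render(self, extended: bool = False) -> str:
        # Default view shows only the path part; extended shows all three.
        if extended:
            return f"({self.fs_type}, {self.specifier}, {self.path})"
        return self.path


location = PathLocation("RELATIVE", "knime.workflow", "file1.csv")

print(location.render())
print(location.render(extended=True))
```

Two locations with the same path part can still refer to different files when their type or specifier differ, which is why the extended view can be useful.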

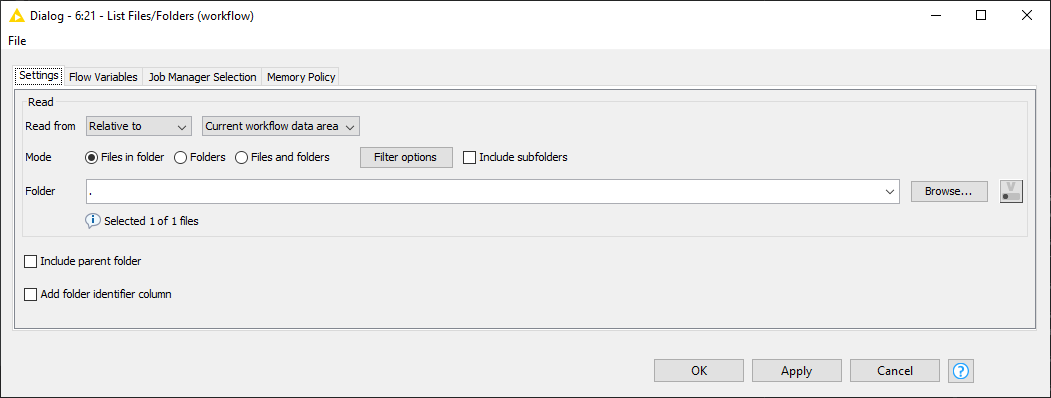

Creating path data cells

In order to work with files and folders in KNIME Analytics Platform you can either select them manually via the node configuration dialog or you might want to list the paths of specific files and/or folders. To do this you can use the List Files/Folders node. Simply open the dialog and point it to the folder whose content you want to list. The node provides the following options:

- Files in folder: Will return a list of all files within the selected folder that match the Filter options.
- Folders: Will return all folders that have the selected folder as parent. To include all sub folders you have to select the Include subfolders option.
- Files and folders: Is a combination of the previous two options and will return all files and folders within the selected folder.

Manipulating path data cells

Since KNIME Analytics Platform version 4.4 you can also use the Column Expressions node, which now supports Path type data cells. This node provides the possibility to append an arbitrary number of columns or modify existing columns using expressions.

Creating path flow variables

There are two ways to create a path flow variable. The first way is to export it via the dialog of the node where you specify the path. This could be a CSV Writer node, for example, where you want to export the path to the written file in order to consume it in a subsequent node. The second way is to convert a path data cell into a flow variable by using one of the available variable nodes such as the Table Row to Variable or Table Row to Variable Loop Start node.

String and path type conversion

Until now not all nodes that work with files have been converted to the new file handling framework and thus do not support the path type. These nodes require either a String or URI data cell or a string flow variable.

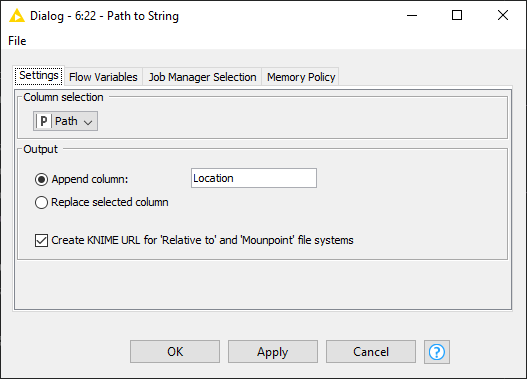

From path to string

The Path to String node converts a path data cell to a string data cell. By default the node will create a String representation of the path that can be used in a subsequent node that still requires the old string or URI type, e.g. JSON Reader.

| You can download this example workflow from KNIME Hub. |

If you only want to extract the plain path you can disable the Create KNIME URL for 'Relative to' and 'Mountpoint' file system option in the Path to String node configuration dialog.

Similar to the Path to String node, the Path to String (Variable) node converts the selected path flow variables to a string variable.

If you want to use a node that requires a URI cell you can use the String to URI node after the Path to String node.

From string to path

In order to convert a string path to the path type you can use the String to Path node. The node has a dynamic File System port that you need to connect to the respective file system if you want to create a path for a connected file system such as Amazon S3.

Similar to the String to Path node, the String to Path (Variable) node converts a string flow variable into a path flow variable.

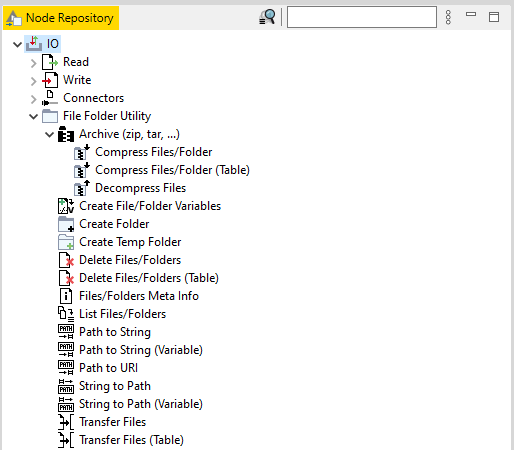

File Folder Utility nodes

With the introduction of the new file handling framework with KNIME Analytics Platform release 4.3, we also updated the functionality of the utility nodes.

You can find the File Folder Utility nodes in the node repository under the category IO > File Folder Utility.

You can add a dynamic port to connect to a connected file system directly with the File Folder Utility nodes. In this way you can easily work with files and folders in any file system that is available.

In the example below the Transfer Files node is used to connect to two file systems, a source file system (Google Drive in this example) and a destination file system (SharePoint Online in this example), to easily transfer files from Google Drive to SharePoint Online.

Table based input Utility nodes

Some of the File Folder Utility nodes are also available with a table based input. Those are the ones that have (Table) in their name, e.g. Compress Files/Folder (Table), Delete Files/Folders (Table), and Transfer Files (Table) nodes. You can give these nodes an input data table where a column contains a Path to a file in each data cell, and these are processed by the File Folder Utility (Table) nodes.

For example, with the Compress Files/Folder (Table) and Delete Files/Folders (Table) nodes you can respectively compress or delete the files whose Paths are listed in a column of the input data table. With Transfer Files (Table) you can transfer all the files whose Paths are listed in a column of the input data table.

| Since the new utility nodes (e.g. Compress Files/Folder, Delete Files/Folders and Transfer Files) do not yet provide the full functionality of the old nodes, as of now the old nodes, including the required connector nodes for the different file systems, are still available but marked as (legacy). You can find them in the node repository under the IO/File Handling (legacy) category. They will be deprecated once the new nodes have the full functionality. If you are missing some functionality in the new nodes please let us know via the KNIME Forum. |

Compatibility and migration

With the 4.3 release of the KNIME Analytics Platform we introduced the new file handling framework. The framework requires a rewrite of the existing file reader and writer nodes as well as the utility nodes. However, not all nodes provided by KNIME or the Community have been migrated yet, which is why we provide this section to help you work with old and new file handling nodes side by side.

How to work with workflows that contain both old and new file handling nodes

How to distinguish between the old and new file handling nodes

You can identify the new file handling nodes by the three dots for the dynamic port on the node icon, which allow you to connect to a connected file system.

Another way to identify a new file handling node is by the Input location section in the node configuration dialog. Here, for nodes that have been migrated to the new file handling framework, you will typically find a drop-down menu that allows you to specify the file system that should be used. The following image shows the Input location section of the configuration dialog of a typical reader node in the new and old file handling framework.

Working with old and new flow variables and data types

Using flow variables you can specify the location of a file to read or write automatically. Because not all nodes provided by KNIME or the Community have been migrated yet, you might face the problem that the newer file nodes support the new Path type whereas older nodes still support a string flow variable, which can be either a file path or a URI.

The List Files/Folders node returns a table with a list of files/folders in the new Path type. To use the Path type in a node that has not been migrated yet (e.g. in the Table Reader), you first need to convert the new Path type into a string using the Path to String node.

Some older nodes require a URI as input. To convert the Path type to a URI you first need to convert it to a string using the Path to String node and then convert the string to a URI using the String to URI node.

If you already have a Path type flow variable you can use the Path to String (Variable) node to convert the Path type flow variable into a string flow variable.

If you want to convert an existing file path or string to the new Path type you can either use the String to Path node to convert data columns or the String to Path (Variable) node to convert string variables.
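The difference between a file path held as a string and the same location expressed as a URI can be illustrated outside of KNIME. The following is a minimal Python sketch, not KNIME code: the helper name `path_string_to_file_uri` and the example paths are assumptions made for illustration, and the sketch only covers absolute, POSIX-style local paths.

```python
from urllib.parse import quote


def path_string_to_file_uri(path: str) -> str:
    """Turn an absolute POSIX-style path string into a file:// URI.

    Hypothetical helper for illustration only; it is not part of KNIME.
    """
    if not path.startswith("/"):
        raise ValueError(f"Expected an absolute path, got: {path!r}")
    # quote() percent-encodes characters such as spaces but keeps "/" as is.
    return "file://" + quote(path)


# Made-up example locations.
plain = path_string_to_file_uri("/folder1/folder2/file.txt")
spaced = path_string_to_file_uri("/folder1/my file.txt")

print(plain)
print(spaced)
```

The string form is what a string flow variable would typically carry, while the URI form adds a scheme and percent-encoding, which is why the conversion to a URI is a separate step after the conversion to a string.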

How to migrate your workflows from old to new file handling nodes

This section should help you migrate your workflows from the old file handling framework to the new file handling framework. The new framework provides many advantages over the old framework, which come at the cost of some changes in usage that we will address here.

In order to standardize the node names and better capture the functionality of the actual node we have renamed some of the nodes. In addition we have also removed some nodes whose functionality has been integrated into another node, such as the Excel Writer, which replaces the original Excel Writer (XLS) as well as the Excel Sheet Appender node.

| Node Icon | 4.2 Old | | 4.3 New |
|---|---|---|---|
| | Zip Files | → | |
| | Unzip Files | → | Decompress Files |
| | Create Directory | → | Create Folder |
| | Delete Files | → | |
| | | → | |
| | | → | List Files/Folders |
| | Create Temp Dir | → | Create Temp Folder |
| | Connection (e.g. SSH Connection) | → | Connector (e.g. SSH Connector) |
| | Excel Reader (XLS) | → | Excel Reader |
| | Read Excel Sheet Names (XLS) | → | Read Excel Sheet Names |
| | Excel Writer (XLS), Excel Sheet Appender | → | Excel Writer |
| | File Reader | → | |

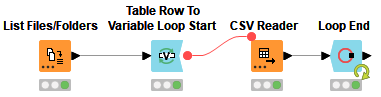

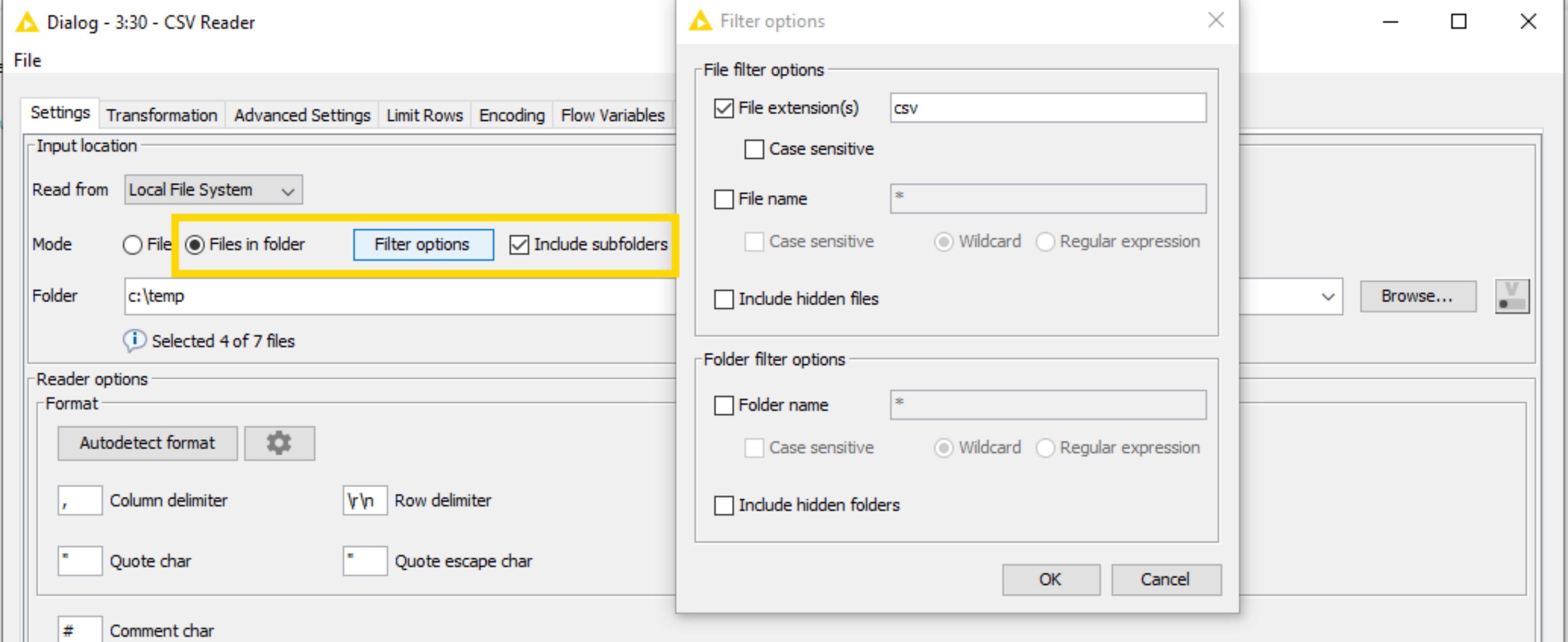

Reading multiple files into a single KNIME data table

Using the old file handling framework you had to use loops in order to read multiple files into a single KNIME data table. So your workflows looked like the following image.

With the new file handling framework you no longer need to use loops, since reading multiple files into a single KNIME data table is now built into the reader nodes themselves. All you have to do is change the reading mode to Files in Folder. You can then open the Filter options dialog by clicking on the Filter options button. Here you can filter the files that should be considered, e.g. based on their file extension. In addition you can specify whether files in subfolders should be included or not.

| If you continue to use a loop to read multiple files and encounter problems during the execution have a look at the Controlling readers via flow variables section. |
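To make the idea behind the Files in Folder mode and its filter options more concrete, here is a rough Python analogy. It is not how the KNIME reader nodes are implemented; the function name `list_files_in_folder`, its parameters and the demo file names are all assumptions made for illustration. The sketch selects files by extension and optionally descends into subfolders.

```python
import tempfile
from pathlib import Path
from typing import List


def list_files_in_folder(folder: Path, extension: str, include_subfolders: bool) -> List[Path]:
    """Return the files below `folder` whose name ends with `extension`.

    Hypothetical helper mirroring an extension filter plus an
    "include subfolders" switch; it is not a KNIME API.
    """
    pattern = f"*{extension}"
    # rglob() walks subfolders recursively, glob() stays in the top-level folder.
    candidates = folder.rglob(pattern) if include_subfolders else folder.glob(pattern)
    return sorted(p for p in candidates if p.is_file())


# Small demo on a throwaway folder (all file names are made up).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "sub").mkdir()
    for name in ("a.csv", "b.csv", "notes.txt", "sub/c.csv"):
        (root / name).write_text("id,value\n1,2\n")

    top_level = [p.name for p in list_files_in_folder(root, ".csv", False)]
    recursive = [p.name for p in list_files_in_folder(root, ".csv", True)]

print(top_level)
print(recursive)
```

In the old framework the equivalent of this selection step had to be built by hand with a listing node and a loop; in the new framework it is a setting of the reader node.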

Reading from or writing to remote file systems

With the old file handling framework, when working with remote file systems such as Amazon S3 or HDFS you had two options when reading files:

-

Download the file to a local folder and then point the reader node to the local copy

-

If available, use one of the File Picker nodes (e.g. Amazon S3 File Picker, Azure Blob Store File Picker or Google Cloud Storage File Picker) to create a signed URL to pass into the reader node.

In order to write a file to a remote file system you first had to write the file to your local hard drive and then upload it to the remote file system.

With the new file handling framework you no longer need to use any additional nodes to work with files in remote file systems. You simply connect the reader and writer nodes to the corresponding connector node via the dynamic ports.

Working with KNIME URL

When working with the nodes of the new file handling framework you no longer need to create KNIME URLs but can use the built-in convenience file systems Relative to and Mountpoint. The following table lists the relation between the KNIME URL and the two new file systems.

| KNIME URL | Convenience file system |
|---|---|
| knime://knime.node/ | No direct replacement due to security reasons, but check out Relative to → Current workflow data area |
| knime://knime.workflow/ | Relative to → Current workflow* |
| knime://knime.mountpoint/ | Relative to → Current mountpoint |
| knime://<mountpoint_name>/ | Mountpoint → <mountpoint_name> |

| Due to security reasons KNIME workflows are no longer folders but are considered files. So you can no longer access data within a workflow directory except for the newly created workflow data area. |

If you need the KNIME URL for a Relative to or Mountpoint path you can use the Path to String and Path to String (Variable) nodes with the Create KNIME URL for 'Relative to' and 'Mountpoint' file systems option enabled, as it is by default.

| We encourage you to use the new convenience file systems Relative to and Mountpoint in favor of the KNIME URLs. For more details on how to convert from the new file systems to the old KNIME URLs see the Working with old and new flow variables section. |
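The relation between KNIME URLs and the convenience file systems listed in the table above can be written down as a small lookup. The following Python sketch is only an illustration of that table and is not part of KNIME; the function name `convenience_file_system` and the example URLs are assumptions.

```python
from urllib.parse import urlsplit

# Hosts with a fixed counterpart, as listed in the table of this section.
RELATIVE_HOSTS = {
    "knime.workflow": "Relative to → Current workflow",
    "knime.mountpoint": "Relative to → Current mountpoint",
}


def convenience_file_system(knime_url: str) -> str:
    """Name the convenience file system that corresponds to a KNIME URL.

    Hypothetical helper for illustration; it only inspects the host part
    of the URL and does not resolve any file.
    """
    parts = urlsplit(knime_url)
    if parts.scheme != "knime" or not parts.netloc:
        raise ValueError(f"Not a KNIME URL: {knime_url!r}")
    host = parts.netloc
    if host == "knime.node":
        # No direct replacement exists for node-relative URLs.
        return "No direct replacement (see Relative to → Current workflow data area)"
    if host in RELATIVE_HOSTS:
        return RELATIVE_HOSTS[host]
    # Any other host is treated as an explicit mountpoint name.
    return f"Mountpoint → {host}"


# Made-up example URLs.
print(convenience_file_system("knime://knime.workflow/data/file.csv"))
print(convenience_file_system("knime://LOCAL/folder/file.csv"))
```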

Excel Appender

The functionality of the Excel Appender node has been integrated into the new Excel Writer node. The node allows you to create new Excel files with one or many spreadsheets but also to append any number of spreadsheets to an existing file. The number of sheets to write can be changed via the dynamic ports option of the node.

File Reader and File Reader (Complex Format) nodes

With KNIME Analytics Platform release 4.4.0 we:

-

Improved the File Reader node

-

Introduced a new File Reader (Complex Format) node

The File Reader node now supports the new File Handling framework and can use path flow variables and connect to file systems. The File Reader node is able to read the most common text files.

The newly introduced File Reader (Complex Format) node also supports the new File Handling framework, and it is able to read complex format files. We recommend using the File Reader (Complex Format) node only in case the File Reader node is not able to read your file.

With the File Reader node you can also enable the Support changing file schemas option in the Advanced Settings tab of its configuration dialog. This allows the node to handle changes in the input file structure between different invocations. This is not possible with the File Reader (Complex Format) node, which does not support files with changing schemas. Thus, if the File Reader (Complex Format) node is used in a loop, you should make sure that all files have the same format (e.g. separators, column headers, column types). The node saves the configuration only during the first execution. Alternatively, the File Reader node can be used.


Source: https://docs.knime.com/2021-06/analytics_platform_file_handling_guide/index.html